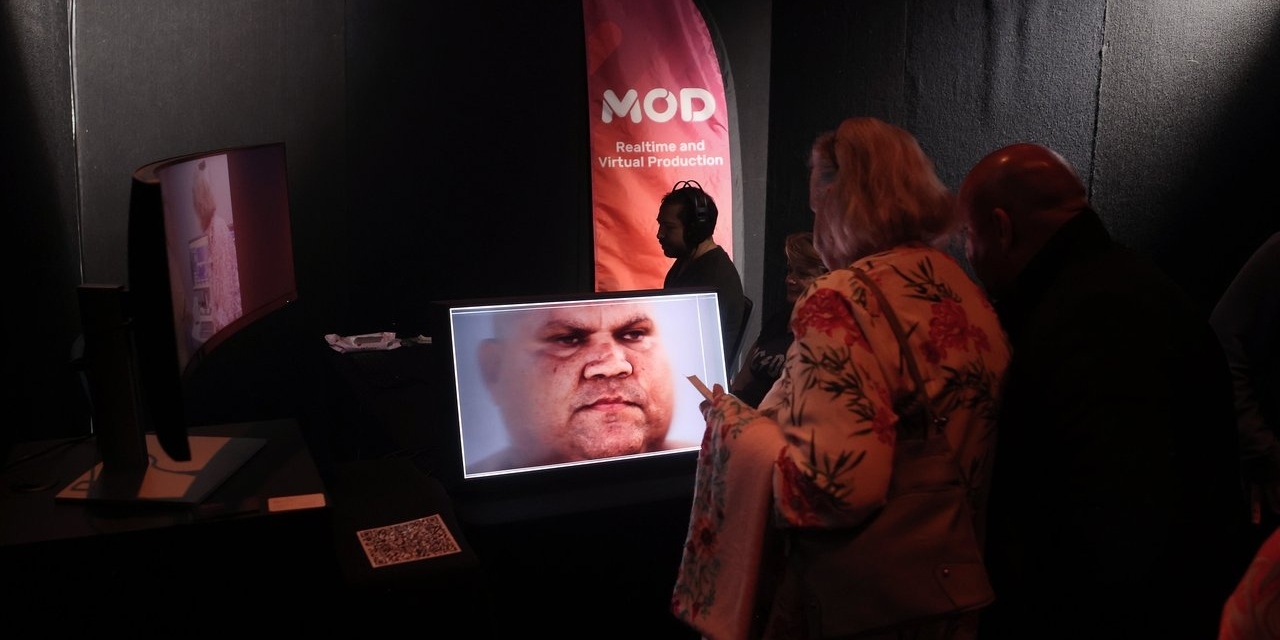

3D holograms may sound like something you’d only see in a sci-fi movie, but guests at 2023’s SXSW Sydney were treated to a rare real-life holographic experience thanks to Australian realtime and virtual production studio Mod.

The project came about after Mod’s team were engaged by the Australian Film, Television and Radio School (AFTRS) to collaborate with First Nations Elder Nicholas Thompson-Wymarra, with the aim of recording his stories in full 3D for the first time.

Completed thanks to the Netflix Indigenous Scholarship Fund, the project allowed Mod to trial and compare cutting-edge realtime and virtual production techniques for volumetric and motion capture, opening up new opportunities for recording cultural heritage. Passionate about sharing his country and culture, Nick states, “Our Gudang Yadhaykenu Nation Land, Sea and Air is located on the Cape York Peninsula, Top Of Australia. IPI Dreaming with Rich Ecosystem.”

Here is an overview of the key steps undertaken to capture these 3D avatars:

Scoping

Over several months, Mod’s team listened to Gudang Yadhaykenu traditional owners, researching and testing how best to achieve their aims for the project. The final scoping report laid out a roadmap for an intensive one-day performance session, with the goal of capturing a five-minute 3D story as the final product.

Recognising the importance of the project, Mod ended up delivering three stories, totalling 16 minutes of content.

3D Modelling

On production day, Mod teamed up with Avatar Factory to create a full-body scan of Thompson-Wymarra using a 172-camera rig, resulting in a photogrammetry-based 3D model of the subject.

This model was later used within Unreal Engine’s Mesh to MetaHuman pipeline to create a rigged character customised in Thompson-Wymarra’s likeness.

Volumetric Video Capture

Thompson-Wymarra’s performances for the holographic stories were recorded at The Electric Lens Co’s studio using a seven-camera volumetric video capture array. These recordings provided both high-quality geometry cache animation and video textures suitable for use in Unreal Engine.

Machine learning processes were used in delivering the final sequence, including:

- 3D scan processing

- Marker removal

- Voice isolation

- Transcription

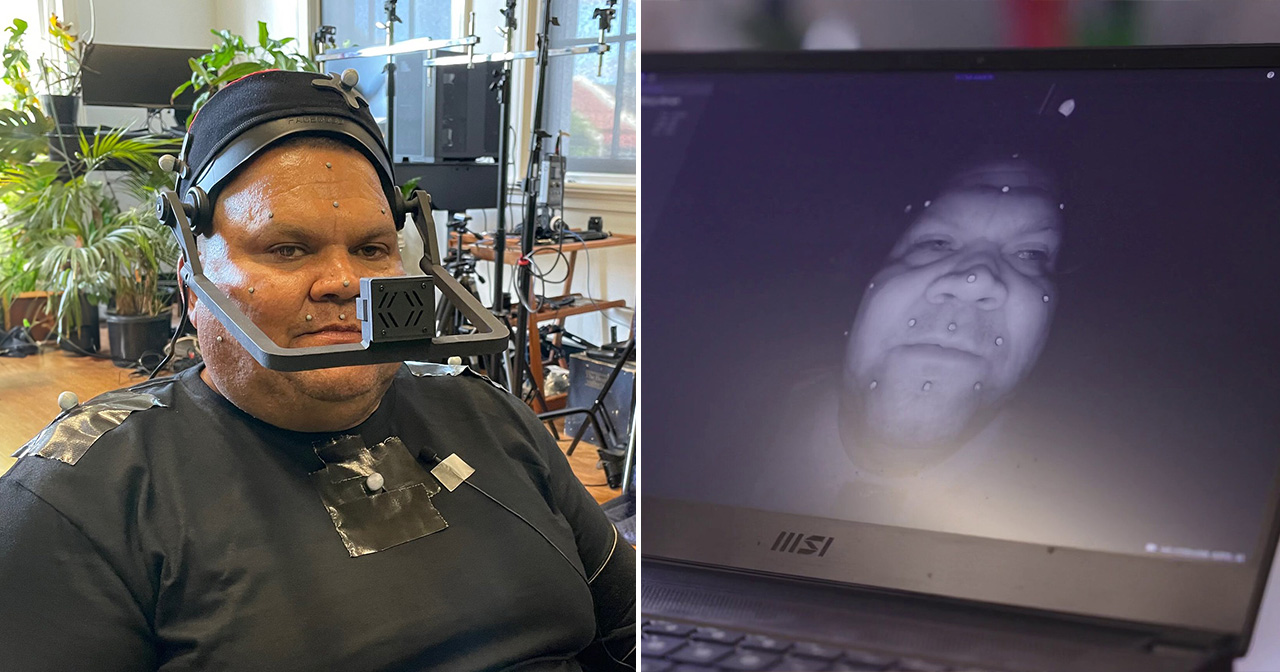

Motion Capture

Mod used Electric Lens Co’s OptiTrack full-body motion capture system, comprising 13 cameras and a suit, together with a FaceGood Head Mounted Camera (HMC) to capture facial motion. OptiTrack markers were placed on Nick’s face to assist in the tracking process.

App Development

An Unreal Engine app was built for viewing the scans on traditional desktop or holographic displays.

For the holographic displays Mod utilised two Looking Glass Factory models:

- Looking Glass Factory Portrait (8″) holographic display

- Looking Glass Factory 32″ holographic display

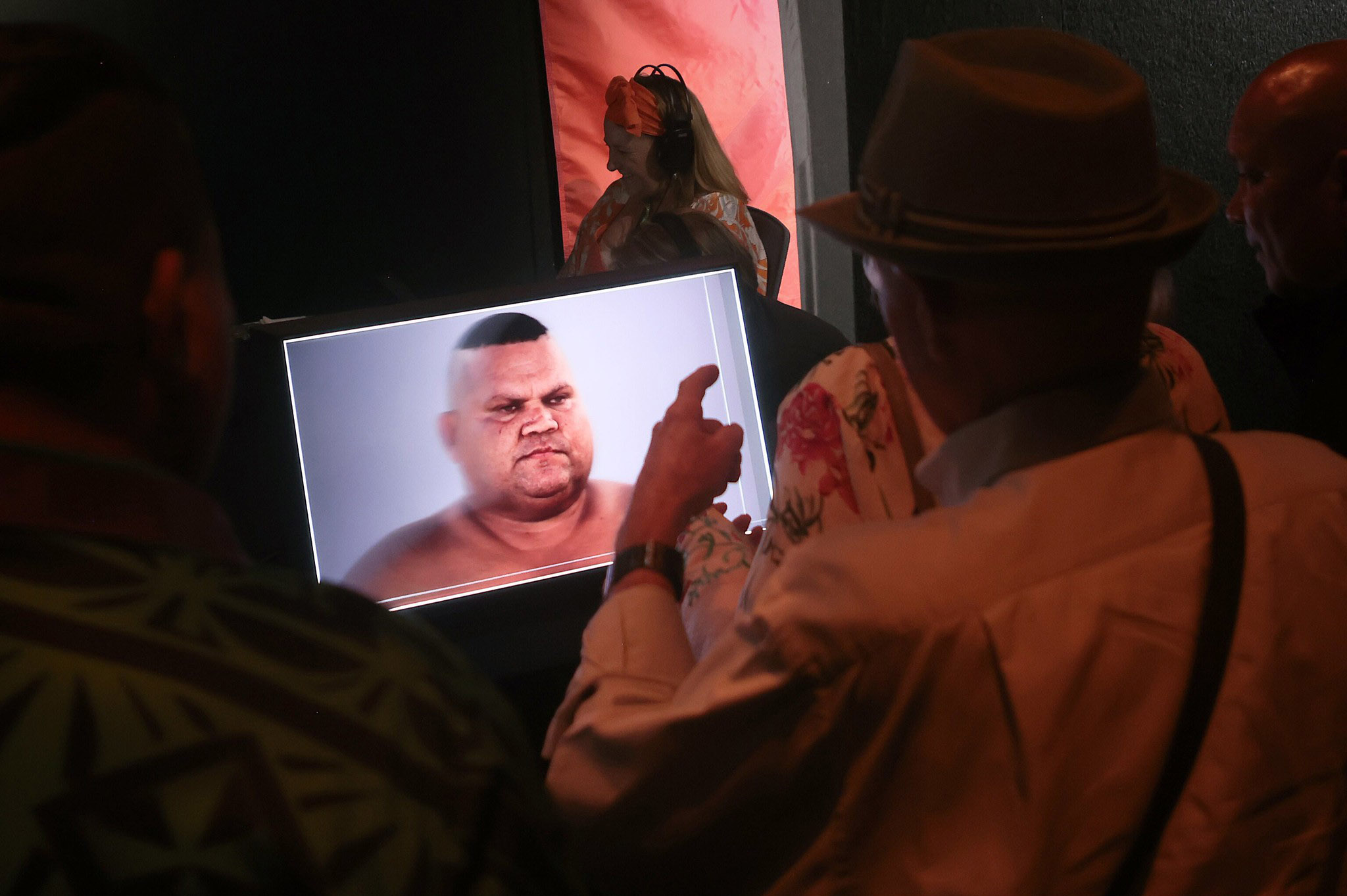

The project was unveiled at the inaugural SXSW Sydney.

Key Findings

The project gave all stakeholders valuable experience of the trade-offs between two different products: volumetric video of characters (below left) versus ‘digital human’ rigged characters (below right).

Each has its unique uses and benefits.

Volumetric video (based on live-action cameras) can look incredibly lifelike, hence its use in film and TV VFX, but it requires significant amounts of camera footage and processing. Any revised or new performance requires another multi-camera live-action shoot and the processing of large amounts of new data. The fine facial detail captured here was possible because the subject held an almost completely static pose on set. The technique does not lend itself to more dynamic performances without losing fidelity, as the cameras would need to be positioned further back.

By contrast, a 3D rigged character (in this case built with a custom Epic Games MetaHuman workflow) is essentially a puppet that can readily be repurposed for any newly captured performance. That flexibility comes with a significant up-front cost: building an avatar with a photorealistic likeness (a labour-intensive manual process) and character rig functionality capable of supporting any performance.

“The cost of producing a general-purpose puppet capable of delivering any performance of equal fidelity to a video-based likeness is often prohibitive compared to what can be produced to work for a single shot or performance.”

Michela Ledwidge – Mod

Digital human character processes are evolving fast. For this pilot, Mod focused on producing a first-iteration rigged character bust (not a full body), which let the team identify key issues in the MetaHuman pipeline for further development. These include support for larger body types in the custom MetaHuman process, custom hair grooms, blood flow maps and muscle animation.

Mod’s team say they were honoured to have had this opportunity to listen to and learn from Gudang Yadhaykenu people about their culture, and about their long-term vision for utilising new technologies within a Gudang/Yadhaykenu Smart City framework. The team hopes their collaboration on new processes can help empower storytellers, and say they would love to see more First Nations-led virtual production in Australia.

You can see more photos from the project on Flickr.

Credits

AFTRS, in collaboration with Mod and the Gudang Yadhaykenu Tribal Governance Council, and supported by the NETFLIX INDIGENOUS SCHOLARSHIP FUND, present

Through The Eyes of Our Ancestors

Featuring

Nicholas Thompson-Wymarra

Mod

Director / Producer – Michela Ledwidge

Producer – Mish Sparks

Virtual Production Generalist – Sarah Cashman

Senior Developer – Isaac Cooper

Developer – Anthony Johansen-Barr

Stand-in – Tim Gray

BTS Videographer – Aaron Cheater

AFTRS

Director, First Nations and Outreach – Romaine Moreton

Production Manager – Sue Elphinstone

First Nations Community Engagement Manager – George Coles

Gudang Yadhaykenu Tribal Governance Council

Alex Wymarra

Edgar Wymarra

Elizabeth Wymarra

Nicholas Thompson-Wymarra

Bryan Wymarra

Elder Aunty Jennifer Jill Wymarra

Elder Aunty Hazel Wymarra

Electric Lens Co

Volumetric Video & Motion Capture – Matt Hermans

Avatar Factory

Scanning Supervisor – Mark Ruff

Scanning Producer – Kate Ruff

Scanning Wrangler – Chloe Ruff

Thanks

NEP – Nigel Simpson

Looking Glass Factory – Caleb Johnston

Filmed and created on Gadigal Bidjigal Country.